Opus 4.5

— changed how I interact with my craft.

a year ago

About a year ago I wrote about how I quit using LLMs—tl;dr I found that Copilot tab completions were generally unhelpful at anything that’s not trivial frontend code.

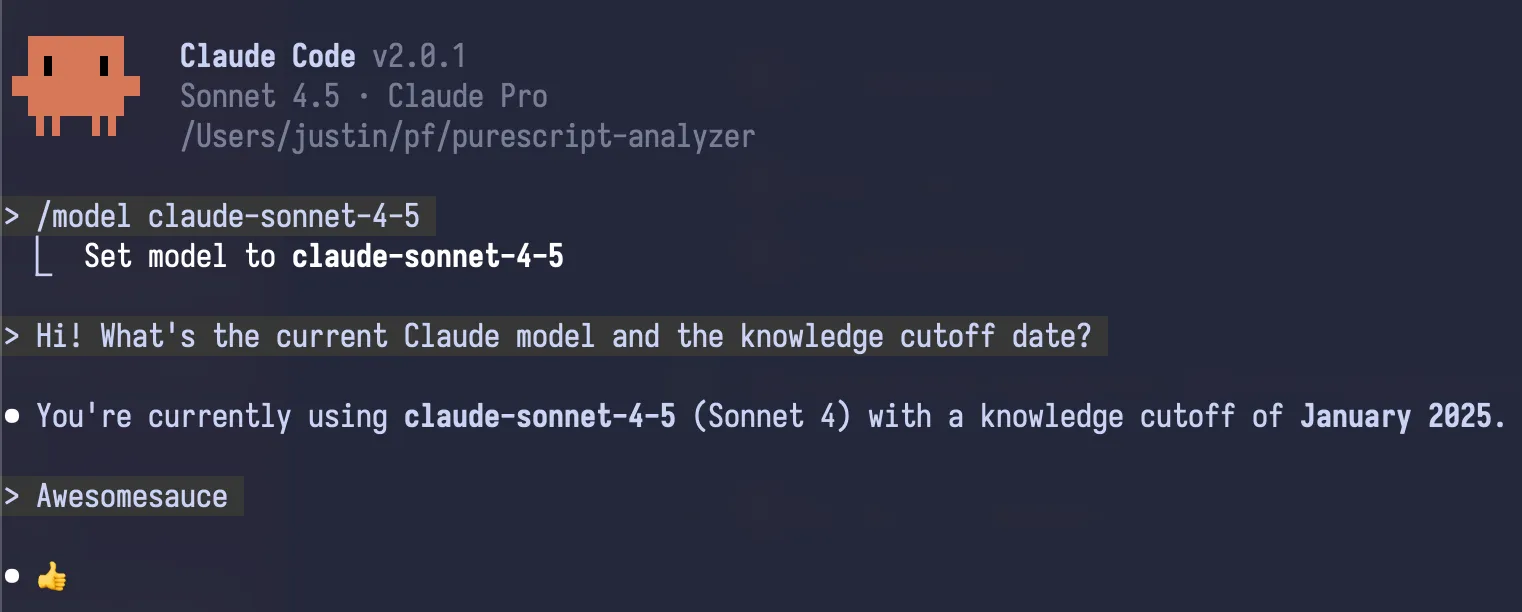

Just a few days after I published the post, Anthropic released Claude Code alongside 3.7 Sonnet; still fresh off LLM fatigue, especially with the harder problems I tried throwing at it, I mostly dismissed Claude Code until mid-2025, right around the release of Claude 4. Then Sonnet 4.5 dropped, and I was hooked.

Sonnet 4.5 within the confines of its harness was the first LLM that gave me an ‘oh shit’ moment. I mostly used it on a pretty large fullstack PureScript codebase and the jump in intelligence from Sonnet 4 to Sonnet 4.5 was significant.

The moment was pretty similar to Theo’s initial reaction to GPT-5, minus the whole fiasco with GPT-5’s eventual rollout not living up to the hype. I’ve actively avoided GPT models since then; I’m willing to give OpenAI another shot, but I’ll wait until they get their shit together with the model naming (maybe in GPT-5.3?).

Sonnet 4 confused PureScript and Haskell syntax from time to time, and it also had a tendency to confuse the : and = symbols when working on files that had record literals and record update syntax close together.

If my memory serves me right, Sonnet 4.5 never produced a syntax error in any of the PureScript code that it wrote. Fewer trivial mistakes means more tokens saved for other problems (Opus 4.5 follows through with this pretty well!).

All things considered, my setup was pretty vanilla; I didn’t really buy into the “here’s the super secret CLAUDE.md tricks that you should try today!” hype.

one tricky thing with evaluating ai workflow tools is that you don’t know which of their authors are actually deranged and live in fantasyland and which are in firm contact with the reality

— dan (@danabra.mov) January 4, 2026 at 9:03 PM

Fast forward to today, I’ve used Opus 4.5 for the past few weeks through Amp Code and then through Claude Code, which made more sense economically for the amount of tokens I was burning through.

I think that Amp is doing something really special with treating threads as first-class data, but that’s a topic for a future post.

opus 4.5

I have mixed feelings about Opus 4.5. It absolutely rocks as a development and research tool, and I feel that my usage patterns with it have measurably accelerated my learning process.

It can write code faster than I can, and for the most part can adapt to what I would’ve written in the first place. It’s not without its faults though.

Even with all of its “intelligence”, the fact remains that it’s a Large Language Model that will actively seek the most plausible-sounding, most-agreeable solution.

I’d be a millionaire if I had a dollar for every time Opus 4.5 concluded that X is the fix, rather than actively determining which Y caused the problem in the first place.

I can understand it for application development; I’ve definitely spot-patched bugs before instead of addressing the underlying problem.

I’ve found that this tendency is detrimental to my development style though, especially for projects like the purescript-analyzer.

feedback loops

One thing I did appreciate with Opus was how well it performed with a well-crafted feedback loop.

The purescript-analyzer codebase has snapshot tests for checking the behaviour of the type checker, and the snapshots are designed to be read by a human reviewer. Giving Opus snapshot tests to review is the compiler development equivalent of giving it browser access to debug rendering.

It’s insane how much value you can pull from a set of well-defined tests.

    ---
    source: tests-integration/tests/checking/generated.rs
    expression: report
    ---
    Terms
    f :: forall (t3 :: Type) (t6 :: Type). t6 -> t3
    g :: forall (t3 :: Type) (t6 :: Type). t6 -> t3

Be sure to check out the amazing insta crate by mitsuhiko.
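The shape of that feedback loop can be sketched without any dependencies. To be clear, this is not the analyzer's actual test harness and it does not use insta; `Signature`, `render_report`, and `diff_lines` are hypothetical names made up for illustration. The idea is simply to render checker output as text a human (or a model) can read, then compare it line by line against a stored snapshot.

```rust
/// A hypothetical term signature, standing in for real type checker output.
pub struct Signature {
    pub name: String,
    pub ty: String,
}

/// Render signatures into a human-readable report, one `name :: type` per line.
pub fn render_report(terms: &[Signature]) -> String {
    let mut out = String::from("Terms\n");
    for term in terms {
        out.push_str(&format!("{} :: {}\n", term.name, term.ty));
    }
    out
}

/// Compare a stored snapshot against a fresh report and describe each
/// differing line. An empty result means the snapshot still matches.
pub fn diff_lines(expected: &str, actual: &str) -> Vec<String> {
    let exp: Vec<&str> = expected.lines().collect();
    let act: Vec<&str> = actual.lines().collect();
    let mut diffs = Vec::new();
    for i in 0..exp.len().max(act.len()) {
        let e = exp.get(i).copied().unwrap_or("<missing>");
        let a = act.get(i).copied().unwrap_or("<missing>");
        if e != a {
            diffs.push(format!("line {}: expected `{}`, got `{}`", i + 1, e, a));
        }
    }
    diffs
}
```

A test would call `render_report` on the checker's output and fail if `diff_lines` against the stored text comes back non-empty. The readable diff is the part that matters: it is what gets handed back to the reviewer, human or otherwise.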

Large Language Models are not thinking machines in the sense that they mimic how we process information. Our working memory differs from person to person in how much we can retain and how fast we can ingest context.

Opus is not bounded by reading speed; I’ve given it hundreds of lines of compiler logs and it was able to identify most bugs faster than I could even build the recursive context required to navigate a call stack.

Honestly, if you’re working on a compiler, the best gift you can give yourself is to write a pretty printer and use a structured logging library.
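For a rough idea of what that looks like, here is a minimal sketch, assuming a toy type AST with only variables and function arrows. `Type` and `log_line` are hypothetical names, not anything from purescript-analyzer, and `log_line` is a hand-rolled stand-in for what a real structured logging library would do for you.

```rust
use std::fmt;

/// A tiny, hypothetical type AST; a real compiler's representation is far richer.
pub enum Type {
    Var(String),
    Function(Box<Type>, Box<Type>),
}

impl fmt::Display for Type {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            Type::Var(name) => write!(f, "{}", name),
            Type::Function(arg, result) => match **arg {
                // Arrows associate to the right, so only a function on the
                // left-hand side needs parentheses.
                Type::Function(..) => write!(f, "({}) -> {}", arg, result),
                _ => write!(f, "{} -> {}", arg, result),
            },
        }
    }
}

/// Format one structured log line as `event key="value" ...`.
/// Values are debug-quoted so they stay unambiguous when they contain spaces.
pub fn log_line(event: &str, fields: &[(&str, String)]) -> String {
    let mut line = String::from(event);
    for (key, value) in fields {
        line.push_str(&format!(" {}={:?}", key, value));
    }
    line
}
```

With something like this in place, a unification step could emit `log_line("unify", &[("lhs", lhs.to_string()), ("rhs", rhs.to_string())])`, and the resulting log reads the same whether a person or a model is skimming it.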

what’s next

Opus fundamentally changed how I interact with programming, and the work that I’m doing with it is practically unrecognizable from my earliest memories of the craft.

I remember digging into Nix source code just to figure out how to get a statically linked version of GHC to compile musl binaries with, spending hours upon hours just debugging.

Now… I can just ask the hungry ghost trapped in a jar to look it up for me.

It’s more important than ever to find your niche in software. Anyone can conjure up a half-decent React app now. Writing code is no longer the bottleneck; that ship sailed a long time ago.

Frontier models may get good enough in the coming years to render me obsolete, but I will never allow that to diminish my love for the pursuit of solving hard problems.